predict() Function in Python:

In data science, we train machine learning models on datasets and then use the trained models to predict values for data they have not seen before.

This is when the predict() function comes into play.

In Python machine learning libraries such as scikit-learn, a model's predict() method predicts the labels (or values) of new data based on the trained model.

Syntax:

model.predict(data)

The predict() function usually accepts a single parameter: the data to make predictions for, which is often the test set.

It returns the predicted labels for the data supplied as an argument, based on what the model learned during training.

Thus, the predict() method operates on a trained model, using what the model learned during training to map each row of the test data to a predicted label.
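As a minimal sketch of this fit-then-predict pattern, the snippet below trains scikit-learn's LinearRegression on a made-up dataset where the target is twice the feature. The toy numbers and variable names are illustrative assumptions and are not part of the bike dataset used later.

```python
from sklearn.linear_model import LinearRegression

# Made-up training data: one feature per row, target is 2 * feature.
X_known = [[1], [2], [3], [4]]
y_known = [2, 4, 6, 8]

# fit() learns from the labelled data and returns the trained model.
toy_model = LinearRegression().fit(X_known, y_known)

# predict() takes rows with the same number of features as the
# training data and returns one predicted value per row.
X_new = [[5], [6]]
toy_predictions = toy_model.predict(X_new)
print(toy_predictions)
```

The returned array has one entry per input row, so here it holds two values, close to 10 and 12.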

Implementation:

- Because the dataset contains categorical variables, use the pandas.get_dummies() function to create dummy (one-hot) columns for the categorical features so that the models can work with them.

- Use the train_test_split() function to divide the dataset into training and testing datasets.

# Import train_test_split from sklearn.model_selection using the import keyword.

from sklearn.model_selection import train_test_split

# Import os module using the import keyword

import os

# Import pandas module using the import keyword

import pandas

# Load the dataset with the read_csv() function by passing the file name as

# an argument to it, and store it in a variable.

bike_dataset = pandas.read_csv("bikeDataset.csv")

# Make a copy of the original given dataset and store it in another variable.

bike = bike_dataset.copy()

# List the categorical columns to be one-hot encoded and store the list in a variable.

categorical_column_updated = ['season', 'yr', 'mnth', 'weathersit', 'holiday']

# Replace each listed column with its dummy (indicator) columns.

bike = pandas.get_dummies(bike, columns=categorical_column_updated)

# Separate the independent variables (X) from the dependent variable (Y).

X = bike.drop(['cnt'], axis=1)

Y = bike['cnt']

# Divide the dataset into 80 percent training and 20 percent testing.

X_train, X_test, Y_train, Y_test = train_test_split(
    X, Y, test_size=.20, random_state=0)
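To see what these preprocessing steps produce, the same calls can be tried on a small made-up frame. The column values below are invented for illustration (only the column names season and cnt echo the bike dataset), so this is a sketch of the mechanics rather than a reproduction of the real data.

```python
import pandas
from sklearn.model_selection import train_test_split

# A made-up frame with one categorical column (season) and ten rows.
toy = pandas.DataFrame({
    "season": [1, 2, 3, 1, 2, 3, 1, 2, 3, 1],
    "temp":   [0.2, 0.5, 0.8, 0.3, 0.6, 0.7, 0.1, 0.4, 0.9, 0.2],
    "cnt":    [120, 340, 560, 150, 390, 510, 90, 300, 600, 130],
})

# get_dummies() replaces "season" with one indicator column per category.
encoded = pandas.get_dummies(toy, columns=["season"])
print(list(encoded.columns))
# ['temp', 'cnt', 'season_1', 'season_2', 'season_3']

# Separate features from the target, then hold out 20 percent for testing.
toy_X = encoded.drop(["cnt"], axis=1)
toy_Y = encoded["cnt"]
toy_X_train, toy_X_test, toy_Y_train, toy_Y_test = train_test_split(
    toy_X, toy_Y, test_size=0.20, random_state=0)
print(toy_X_train.shape, toy_X_test.shape)
# (8, 4) (2, 4)
```

With ten rows and test_size=0.20, eight rows go to training and two to testing; random_state fixes which rows land in each part so the split is repeatable.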

predict() Function with Decision Tree Algorithm

Decision Tree Algorithm:

Decision Tree is a supervised learning technique that can be used for both classification and regression problems, although it is most commonly used for classification. It is a tree-structured model in which internal nodes test features of the dataset, branches represent decision rules, and each leaf node represents an outcome.

A decision tree has two types of nodes: decision nodes and leaf nodes. Decision nodes test a feature and have several branches, whereas leaf nodes hold the result of those decisions and have no further branches.

The decisions or tests are based on the characteristics of the given dataset.

It is a graphical representation of all possible solutions to a problem/decision given certain parameters.

It is named a decision tree because, like a tree, it begins at the root node and spreads from there.
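The structure described above can be sketched in a few lines of plain Python. The tree below is hand-built rather than learned from data, and its feature names, thresholds, and leaf values are made-up assumptions chosen only to show how a prediction travels from the root through decision nodes to a leaf.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class Leaf:
    value: float  # the prediction stored at this leaf


@dataclass
class Decision:
    feature: str      # name of the feature this node tests
    threshold: float  # go left if sample[feature] <= threshold
    left: "Node"
    right: "Node"


Node = Union[Leaf, Decision]


def predict_one(node, sample):
    """Walk from the root to a leaf and return the leaf's value."""
    while isinstance(node, Decision):
        if sample[node.feature] <= node.threshold:
            node = node.left
        else:
            node = node.right
    return node.value


# Hypothetical tree: the root tests "temp", one branch then tests "holiday".
toy_tree = Decision(
    "temp", 0.5,
    left=Leaf(1200.0),
    right=Decision("holiday", 0.5, left=Leaf(4500.0), right=Leaf(3000.0)),
)

print(predict_one(toy_tree, {"temp": 0.3, "holiday": 0}))  # 1200.0
print(predict_one(toy_tree, {"temp": 0.8, "holiday": 1}))  # 3000.0
```

A trained tree model follows the same idea, except that the features, thresholds, and leaf values are chosen automatically during fitting.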

Example:

Fit a Decision Tree model on the training split created earlier, then use the predict() function to predict the target values of the testing dataset. The code uses DecisionTreeRegressor, the regression variant of the algorithm.

# On our dataset, we're going to build a Decision Tree model.

from sklearn.tree import DecisionTreeRegressor

# We pass max_depth as an argument to DecisionTreeRegressor.

DT_model = DecisionTreeRegressor(max_depth=5).fit(X_train, Y_train)

# Make predictions on the testing data.

DT_prediction = DT_model.predict(X_test)

# Print the predicted values.

print(DT_prediction)

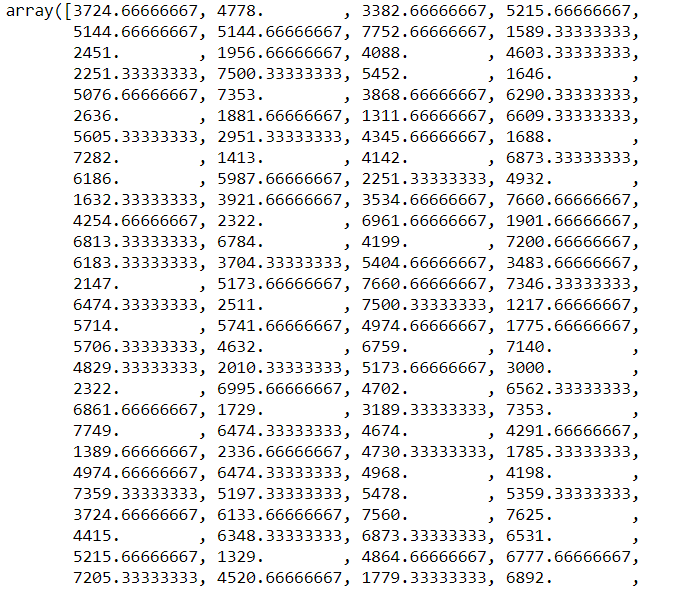

Output:

predict() Function with KNN Algorithm

K-Nearest Neighbor (KNN) Algorithm:

- K-Nearest Neighbor is a simple Machine Learning method that uses the Supervised Learning technique.

- The K-NN method assumes that similar cases lie close together, so it compares a new case with the existing cases and places it in the category of the cases it most resembles.

- The K-NN method stores all the available data and classifies a new data point based on its similarity to the stored points. This means that when new data arrives, it can easily be assigned to a well-suited category using the K-NN algorithm.

- The K-NN approach can be used for both regression and classification, but it is more commonly utilized for classification tasks.

- K-NN is a non-parametric algorithm, which means it makes no assumptions about the distribution of the underlying data.

- It is also called a lazy learner algorithm because it does not learn from the training set immediately. Instead, it stores the dataset and does the actual work only when a prediction is requested.

- During the training phase, the KNN algorithm simply stores the dataset; when new data is received, it assigns the new data to the category of the most similar stored examples.
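To make the "lazy learner" idea concrete, here is a minimal from-scratch sketch of K-NN regression in plain Python. TinyKNNRegressor is a hypothetical class written for illustration and is not scikit-learn's implementation; it assumes Euclidean distance and an unweighted mean of the k nearest targets, and the data is made up.

```python
import math


class TinyKNNRegressor:
    """Illustrative K-NN regressor: stores data in fit(), works in predict()."""

    def __init__(self, n_neighbors=3):
        self.n_neighbors = n_neighbors

    def fit(self, X, y):
        # "Training" only memorises the data; nothing is computed yet.
        self._X = [list(row) for row in X]
        self._y = list(y)
        return self

    def predict(self, X):
        predictions = []
        for query in X:
            # Distance from the query to every stored training row.
            by_distance = sorted(
                (math.dist(query, row), target)
                for row, target in zip(self._X, self._y)
            )
            nearest = by_distance[: self.n_neighbors]
            # Predict the mean target of the k nearest neighbours.
            predictions.append(sum(t for _, t in nearest) / len(nearest))
        return predictions


# Made-up one-feature data where the target is 10 * feature.
toy_X_train = [[1.0], [2.0], [3.0], [10.0], [11.0]]
toy_y_train = [10.0, 20.0, 30.0, 100.0, 110.0]

toy_knn = TinyKNNRegressor(n_neighbors=3).fit(toy_X_train, toy_y_train)
print(toy_knn.predict([[2.0], [10.5]]))  # [20.0, 80.0]
```

The second prediction shows a typical K-NN effect: with only two training points near 10.5, the third neighbour is the distant point at 3.0, which pulls the average down to 80.0.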

In this example, we use the KNN algorithm to make predictions from the dataset. We fit the KNeighborsRegressor() model on the training data.

We then use the predict() function to make predictions on the testing dataset.

# On our dataset, we're going to build a KNN model.

from sklearn.neighbors import KNeighborsRegressor

# We pass n_neighbors as an argument to KNeighborsRegressor.

KNN_model = KNeighborsRegressor(n_neighbors=3).fit(X_train, Y_train)

# Make predictions on the testing data.

KNN_predict = KNN_model.predict(X_test)

# Print the predicted values.

print(KNN_predict)

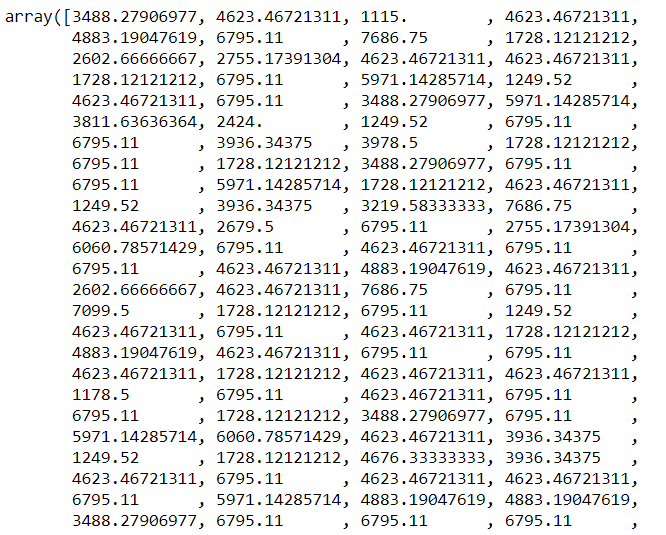

Output: